Building Elder Signs Cluster - Part 3

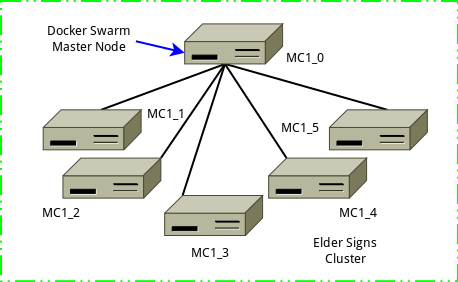

This is the fourth post in the continued effort to turn six Odroid-MC1 Solos into a PXE-booted computer cluster on which to engage in further FOSS hijinks. This post focuses on getting a Docker Swarm set up.

In short, we will initialize swarm mode on one Docker engine and then join the remaining nodes to the swarm.

Overview

The Docker Swarm master machine (MC1_0) will be the swarm manager, and the node on which the commands that manage the swarm are run.

- Assumption: the following ports are open between all cluster nodes (this may need adjusting for the specifics of a particular setup):

- TCP port 2377 for cluster management communications

- TCP and UDP port 7946 for communication among nodes

- UDP port 4789 for overlay network traffic

- IP protocol 50 (ESP), needed if you create encrypted overlay networks
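If a host firewall is active on the nodes, the port list above translates into rules along these lines. This is a minimal sketch assuming plain iptables and a cluster subnet of 192.168.1.0/24 (inferred from the advertise address used below); the function name open_swarm_ports is hypothetical, rules added this way are not persistent across reboots, and a setup using ufw or firewalld would need the equivalent commands for that tool.

```shell
# Hypothetical helper: accept Docker Swarm traffic from the cluster subnet.
# Assumes plain iptables; rules added this way do not survive a reboot.
open_swarm_ports() {
  subnet=${1:-192.168.1.0/24}   # assumed cluster subnet
  # TCP 2377: cluster management communications
  sudo iptables -A INPUT -s "$subnet" -p tcp --dport 2377 -j ACCEPT
  # TCP and UDP 7946: communication among nodes
  sudo iptables -A INPUT -s "$subnet" -p tcp --dport 7946 -j ACCEPT
  sudo iptables -A INPUT -s "$subnet" -p udp --dport 7946 -j ACCEPT
  # UDP 4789: overlay network (VXLAN) traffic
  sudo iptables -A INPUT -s "$subnet" -p udp --dport 4789 -j ACCEPT
  # IP protocol 50 (ESP): only needed for encrypted overlay networks
  sudo iptables -A INPUT -s "$subnet" -p 50 -j ACCEPT
}
```

Usage, on each node: open_swarm_ports 192.168.1.0/24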

Creating the Swarm

On the Docker Swarm Master Node

- Connect to your Swarm master node via ssh. In my setup this is host MC1_0, so the command is

ssh mc1_0

- Initialize the swarm, advertising the master node's IP address to the rest of the cluster:

docker swarm init --advertise-addr 192.168.1.180

This should produce output similar to the following:

$ docker swarm init --advertise-addr 192.168.1.180

Swarm initialized: current node (cb6hdtnkt3e1xxe9jdoqu4190) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-197ovqeh80s5yqyarysgk4w3spw7djscv0r2r5ub1sy1wiaaz0-1nmj5ptmfftfzelnov701kbbg 192.168.1.180:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
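Running docker swarm init on an engine that is already part of a swarm fails with an error, so when scripting this step it can help to check the engine's swarm state first. A minimal sketch; the function name init_swarm is hypothetical, while the {{.Swarm.LocalNodeState}} field of docker info is real and reads "active" once the node is in a swarm.

```shell
# Hypothetical helper: run "docker swarm init" only if this engine is not
# already part of a swarm, so the step can be re-run safely.
init_swarm() {
  addr=$1   # address to advertise to the other nodes, e.g. 192.168.1.180
  state=$(docker info --format '{{.Swarm.LocalNodeState}}')
  if [ "$state" = "active" ]; then
    echo "already part of a swarm; skipping init"
    return 0
  fi
  docker swarm init --advertise-addr "$addr"
}
```

Usage, on mc1_0: init_swarm 192.168.1.180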

Add Nodes to Swarm Using Salt

Make a note of the docker swarm join command that was output on the master node in the previous step. We will use it to join the remaining nodes to the swarm, issuing it from my Salt master node. You could, of course, ssh to each node and run the command there instead.

- From the Salt master node, issue the following command against all of your swarm worker nodes. In my case the command is

sudo salt -L mc1_1,mc1_2,mc1_3,mc1_4,mc1_5 cmd.run 'docker swarm join --token SWMTKN-1-197ovqeh80s5yqyarysgk4w3spw7djscv0r2r5ub1sy1wiaaz0-1nmj5ptmfftfzelnov701kbbg 192.168.1.180:2377'

The output returned should look similar to this:

$ sudo salt -L mc1_1,mc1_2,mc1_3,mc1_4,mc1_5 cmd.run 'docker swarm join --token SWMTKN-1-197ovqeh80s5yqyarysgk4w3spw7djscv0r2r5ub1sy1wiaaz0-1nmj5ptmfftfzelnov701kbbg 192.168.1.180:2377'

mc1_4:

This node joined a swarm as a worker.

mc1_5:

This node joined a swarm as a worker.

mc1_3:

This node joined a swarm as a worker.

mc1_2:

This node joined a swarm as a worker.

mc1_1:

This node joined a swarm as a worker.
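Rather than pasting the token by hand, the current worker token can be fetched from the manager with docker swarm join-token -q worker, which prints only the token. The sketch below assumes passwordless ssh from the Salt master to mc1_0 and that the Salt minion IDs match the host names; the function name join_workers is hypothetical.

```shell
# Hypothetical helper: join Salt minions to the swarm as workers, fetching
# the current worker token from the manager over ssh instead of pasting it.
join_workers() {
  manager_host=$1   # ssh host of the manager, e.g. mc1_0
  manager_addr=$2   # manager address and port, e.g. 192.168.1.180:2377
  targets=$3        # comma-separated minion IDs, e.g. mc1_1,mc1_2
  token=$(ssh "$manager_host" docker swarm join-token -q worker) || return 1
  [ -n "$token" ] || return 1
  # cmd.run executes the join command on each listed minion
  sudo salt -L "$targets" cmd.run "docker swarm join --token $token $manager_addr"
}
```

Usage, from the Salt master: join_workers mc1_0 192.168.1.180:2377 mc1_1,mc1_2,mc1_3,mc1_4,mc1_5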

Check Swarm Cluster Setup

Issue the following command to make sure the Docker Swarm has been created as expected:

docker info|grep -A6 Swarm

The number of nodes reported should be the sum of the manager and all worker nodes (1 + 5 = 6 here). When run on the Docker Swarm master node, the output in my case looks like this:

$ docker info|grep -A6 Swarm

Swarm: active

NodeID: cb6hdtnkt3e1xxe9jdoqu4190

Is Manager: true

ClusterID: jx6d2y24afk1lnq7b56blo9a2

Managers: 1

Nodes: 6

Default Address Pool: 10.0.0.0/8
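The same check can be scripted with docker info --format, which reads the fields directly instead of grepping. This sketch assumes it runs on a manager, since the node counts are only reported there; the function name check_swarm_size is hypothetical, and for this cluster the expected count is 6.

```shell
# Hypothetical helper: verify that this engine is in an active swarm and
# that the manager sees the expected number of nodes (manager + workers).
check_swarm_size() {
  expected=$1
  state=$(docker info --format '{{.Swarm.LocalNodeState}}')
  if [ "$state" != "active" ]; then
    echo "swarm is not active (state: $state)" >&2
    return 1
  fi
  nodes=$(docker info --format '{{.Swarm.Nodes}}')
  if [ "$nodes" -ne "$expected" ]; then
    echo "expected $expected nodes, found $nodes" >&2
    return 1
  fi
  echo "swarm active with $nodes nodes"
}
```

Usage, on mc1_0: check_swarm_size 6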

For more information about the nodes, issue docker node ls. When run on my master node, the output looks like this:

$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

cb6hdtnkt3e1xxe9jdoqu4190 * mc1_0 Ready Active Leader 18.09.9

xw7zleh7wh6xkbpgauj0fq22b mc1_1 Ready Active 18.09.9

l7hddcr4j67tez5a4w72hgjcr mc1_2 Ready Active 18.09.9

r6aur20xvw8gfgexltg95a8mp mc1_3 Ready Active 18.09.9

if08iazchximrgzlr84e5gcvo mc1_4 Ready Active 18.09.9

3t76cq2ailq6gycvsiesensws mc1_5 Ready Active 18.09.9
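Scanning the STATUS column by eye works for six nodes, but a small filter over docker node ls --format can flag anything that is not Ready. A minimal sketch; the function name not_ready_nodes is hypothetical, and it has to run on a manager because docker node ls only works there.

```shell
# Hypothetical helper: print the hostname of every swarm node whose status
# is not "Ready". Returns 0 if all are Ready, 1 if any are not, 2 on error.
not_ready_nodes() {
  list=$(docker node ls --format '{{.Hostname}} {{.Status}}') || return 2
  printf '%s\n' "$list" |
    awk 'NF && $2 != "Ready" { print $1; bad = 1 } END { exit bad + 0 }'
}
```

Usage, on mc1_0: not_ready_nodes && echo "all nodes Ready"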

Testing the Swarm

With a fully formed Docker Swarm cluster, the next step is to test that it works as expected by deploying a service to it. To keep things simple, we will follow the steps outlined in Docker's swarm mode tutorial.

Deploy a Service to the Swarm

On the cluster master node, issue the following command:

$ docker service create --replicas 1 --name helloworld alpine ping docker.com

ypjul544j9p44t516jdf9shu9

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

Trust, but verify, using docker service ls. The output for me looked like this:

$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ypjul544j9p4 helloworld replicated 1/1 alpine:latest
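The REPLICAS column reads running/desired, so "1/1" means the service has converged. That check can be scripted with docker service ls --format '{{.Replicas}}'. A minimal sketch; the function name service_converged is hypothetical, and because the name filter also matches services whose names merely start with the given text, it assumes the service name is unique.

```shell
# Hypothetical helper: succeed only when a service reports all desired
# replicas running, i.e. the REPLICAS column reads like "1/1" or "6/6".
service_converged() {
  replicas=$(docker service ls --filter "name=$1" --format '{{.Replicas}}') || return 1
  [ -n "$replicas" ] || return 1
  running=${replicas%%/*}
  desired=${replicas#*/}
  desired=${desired%% *}   # drop any trailing annotation after the count
  [ "$running" = "$desired" ]
}
```

Usage: service_converged helloworld && echo "helloworld converged"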

Service Inspection

Using the ID of the service deployed above (the service name works as well), issue docker service inspect --pretty <SERVICE-ID>:

$ docker service inspect --pretty ypjul544j9p4

ID: ypjul544j9p44t516jdf9shu9

Name: helloworld

Service Mode: Replicated

Replicas: 1

Placement:

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: alpine:latest@sha256:ab00606a42621fb68f2ed6ad3c88be54397f981a7b70a79db3d1172b11c4367d

Args: ping docker.com

Init: false

Resources:

Endpoint Mode: vip
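The --pretty view is meant for people. When a script only needs one or two values, docker service inspect --format can pull fields out of the service spec directly. The template paths below (.Spec.Mode.Replicated.Replicas and .Spec.TaskTemplate.ContainerSpec.Image) are fields of the service's JSON spec and only apply to replicated services; the function name service_summary is hypothetical.

```shell
# Hypothetical helper: print a replicated service's configured replica count
# and image, using Go-template paths into the spec returned by inspect.
service_summary() {
  docker service inspect --format \
    'replicas={{.Spec.Mode.Replicated.Replicas}} image={{.Spec.TaskTemplate.ContainerSpec.Image}}' \
    "$1"
}
```

Usage: service_summary helloworld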

Where Is the Service Running

Find out where the service is running with docker service ps <SERVICE-ID> (here using the service name instead of the ID):

$ docker service ps helloworld

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

9lekqxzonhzt helloworld.1 alpine:latest mc1_0 Running Running 2 hours ago

Scale the Service in the Swarm

Increase the number of tasks (replicas) for the service from one to six with docker service scale <SERVICE-ID>=<NUMBER-OF-TASKS>:

$ docker service scale helloworld=6

helloworld scaled to 6

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged
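If the goal is one task per node, the replica count can be taken from docker node ls instead of being typed in. A minimal sketch; the function name scale_to_node_count is hypothetical. Note that the scheduler spreads replicas across nodes but does not strictly guarantee one per node; for a strict one-task-per-node layout, swarm's global mode (docker service create --mode global) is the feature built for that.

```shell
# Hypothetical helper: scale a replicated service to one replica per swarm
# node, counting nodes with "docker node ls --quiet" (one ID per line).
scale_to_node_count() {
  count=$(docker node ls --quiet | awk 'END { print NR }')
  [ "$count" -gt 0 ] || return 1
  docker service scale "$1=$count"
}
```

Usage, on mc1_0: scale_to_node_count helloworld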

Where Are the Tasks Running

Run docker service ps <SERVICE-ID> again to see which nodes the six tasks landed on. In my case the scheduler placed one task on each of the six nodes:

$ docker service ps helloworld

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

9lekqxzonhzt helloworld.1 alpine:latest mc1_0 Running Running 2 hours ago

xlu631zmyuk1 helloworld.2 alpine:latest mc1_4 Running Running 6 minutes ago

r8gb27qu5a4n helloworld.3 alpine:latest mc1_5 Running Running 6 minutes ago

y02q65191g4i helloworld.4 alpine:latest mc1_1 Running Running 5 minutes ago

8tsocg24ihce helloworld.5 alpine:latest mc1_3 Running Running 5 minutes ago

0rt4gz1l09r7 helloworld.6 alpine:latest mc1_2 Running Running 6 minutes ago
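To confirm the spread without reading the table, the NODE column can be counted directly with docker service ps --format. A minimal sketch; the function name distinct_task_nodes is hypothetical, and the desired-state=running filter hides tasks the swarm has already shut down.

```shell
# Hypothetical helper: count how many distinct nodes are running tasks of a
# service, ignoring tasks the swarm no longer wants running.
distinct_task_nodes() {
  docker service ps --filter desired-state=running --format '{{.Node}}' "$1" |
    awk 'NF && !seen[$0]++ { n++ } END { print n + 0 }'
}
```

Usage: distinct_task_nodes helloworld, which for the output above should report all six nodes.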

Clean Up Services From the Swarm

Remove the test service from the swarm with docker service rm <SERVICE-ID>:

$ docker service rm helloworld

helloworld

Is the Cluster Clean?

Check that the service is gone from the swarm using docker service ls:

$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS
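The removal and the check can be folded into one step that is safe to re-run: docker service inspect fails for a service that does not exist, so it works as an existence test before and after docker service rm. A minimal sketch; the function name remove_service is hypothetical, and the service's containers may take a moment to stop after the service itself is gone.

```shell
# Hypothetical helper: remove a service if it exists, then confirm the swarm
# no longer knows about it. Its containers may take a moment to stop.
remove_service() {
  if ! docker service inspect "$1" >/dev/null 2>&1; then
    echo "no service named $1"
    return 0
  fi
  docker service rm "$1" >/dev/null || return 1
  if docker service inspect "$1" >/dev/null 2>&1; then
    echo "service $1 still present" >&2
    return 1
  fi
  echo "removed $1"
}
```

Usage, on mc1_0: remove_service helloworld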

Next Steps

- Set up Portainer and test functionality.

That's enough for now; see you in the next post.